By Jo Nova

Right about now the Greens should be rushing to reverse all the plastic bans

Now we know that CO2 is aerial fertilizer and feeds the world, but this study highlights the crazy unscientific randomness of environmental policies chanted by the same people who say “follow the science”. It turns out paper shopping bags produce five times as much CO2 over their lifetime as plastic HDPE bags do. Apparently, plastic bags might strangle a turtle, but in the mind of a dedicated Green, paper bags could be causing the sixth mass extinction. Oh, the dilemma!

A new study in Environmental Science and Technology looked at 16 applications of plastics in modern life and found that in 15 of them, the plastic version produced fewer emissions than the paper, concrete, steel, glass or aluminum sort. And these 16 applications accounted for about 90% of global plastic volume. It seems that with paper bags people often “double bag” their groceries because the bags are prone to breaking, and in the end, in landfill, the paper waste is degraded into methane.

THE DAILY CHART: PLASTIC MADNESS
Steven Hayward, Powerline

So we went and banned plastic straws and plastic bags in much of California and elsewhere because they are made from fossil fuels and a solitary turtle was once found snorting fentanyl through a plastic straw, or something. In any case, Greta/Gaia was displeased, so plastic products had to go. Well guess what: the substitutes for plastic products mostly produce higher greenhouse gas emissions than plastic. Not by just a little but by a lot.

If the Greens gave a toss about CO2 emissions, you’d think they’d be pretty careful to make sure their own plans were not wrecking the planet. And, ipso facto, oops, if they did the wrong thing, you’d think they’d want to fix that like all life on Earth depended on it? Unless, of course, they were attention-seeking totalitarians who just wanted to boss people around for the sake of it?
This is not the first time studies like these have surfaced. We know from what the EcoWorriers won’t do, that they don’t give a damn about carbon emissions. It’s all a big show of virtue signalling, a grand theatre where they pretend to care, and their friends pretend to be impressed. Only people who want to fry coral reefs would choose paper bags over plastic ones, eh?

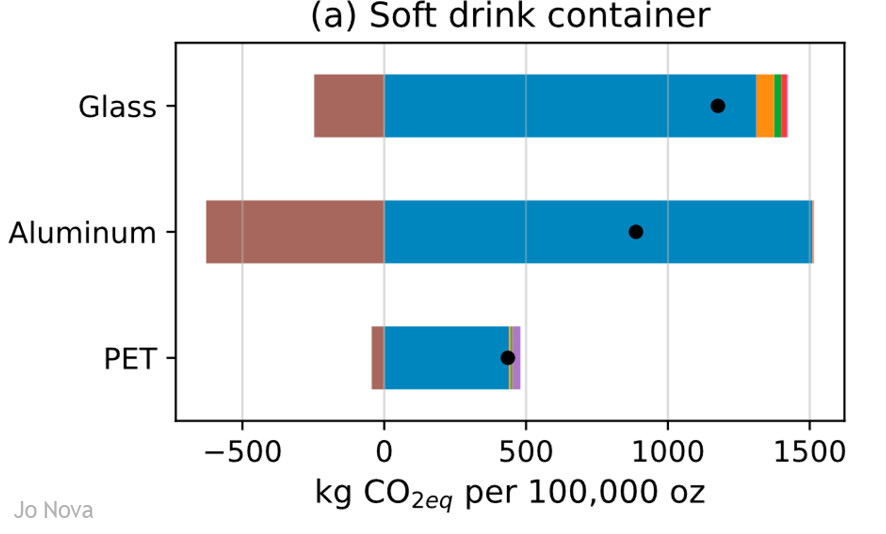

Even though we don’t recycle PET bottles much, they produce one half the emissions of cans, and one third of the emissions of glass:

PET bottles have the lowest emissions impact because of their low weight and low energy intensity during production. In comparison, aluminum cans release twice the emissions of PET bottles, and glass bottles release three times the emissions. PET has the lowest recycling rate (Table S3) among the three alternative containers and the highest emissions when incinerated at end of life (WtE). However, in this case, the production stage dominates the overall emissions, and here, PET has a much lower impact than glass and aluminum (Figure S3).

https://pubs.acs.org/doi/10.1021/acs.est.3c05191

Could a member of Greenpeace even speak the words? PVC pipes are better for climate change (if you care about CO2):

Pet food in tins produces three times the emissions of pet food in little plastic pouch things:

Even plastic milk bottles produce fewer emissions than cartons do, and every little bit matters, as they say:

Obviously Greens will be bragging about the plastic fuel tank in their hybrid cars, right?

…and their acrylic carpet. Save the whales, buy linoleum?

The whole study finds virtually no reason to swap plastic goods for something else:

We conclude that applying material substitution strategies to plastics never really makes sense. This is because plastics’ inherent properties (strong, lightweight, easy to shape, customizable, and comparatively low-GHG emissions) make it the preferred material for minimizing emissions across most products.

h/t Bally

By Jo Nova

Politicians are supposed to care about the voters, but trillions are being spent on an issue that voters don’t give a toss about. Who are politicians serving exactly? Because it isn’t the voters. There is no grassroots clamoring demand for “climate action” and there never was. Could it be that politicians are more worried about what the banker cartel think, and the media moguls, or President Xi, or are they just carving out a post-political job for themselves at the UNEP or the WEF?

The Wall Street Journal reports on a survey that shows even young voters know almost anything is more important than climate change.

Biden Is Spending $1 Trillion to Fight Climate Change. Voters Don’t Care.
By Amrith Ramkumar and Andrew Restuccia, Wall Street Journal

A Journal poll, which surveyed voters in seven swing states in March, found that just 3% of 18-to-34-year-old voters named climate change as their top issue, with most citing the economy, inflation or immigration. That is roughly in line with voters of all ages, 2% of whom cited climate change as their top issue.

It has been this way for years. In 2015 only 3% of US voters thought climate was the most important issue. And let’s not forget all these surveys are done on people who never see a skeptical expert on TV or a real documentary (like Climate: The Movie). They don’t hear that carbon dioxide was higher for most of the last half billion years, or that “climate change” causes record grain yields, and saves 166,000 lives a year. Most of the 18 to 35 year olds have been fed the climate diatribe from school, but even they don’t believe it. If they thought the Antarctic icecap was really about to collapse, they’d rate climate change up there with inflation.

Given the vapor-thin faith of the young and impressionable, the whole climate charade is a house of cards. One good opposition leader just has to point out the costs and start the debate and it’s over. Everyone wants to change the climate until the moment they have to pay for it.

A survey from the Climate Communications unit at Yale suggests the climate gravy-train isn’t buying votes either. 55% of US voters don’t think the trillion dollar Inflation Reduction Act will improve their health. Two thirds don’t think it will help their family. 54% of voters don’t think it will help their children or grandchildren. If you were a politician trying to win votes, this is not how you’d spend a trillion dollars.

Fewer than half of registered voters think the Inflation Reduction Act (IRA) will help them or the country.

And therein lies the grand mystery of democracy. We basically have a giant government industry spending a trillion dollars on an issue people don’t care about and in ways that most voters don’t think will help them, their children, the poor, the economy, jobs or national security. And yet the money flows. Why is that? Just ask “Who Benefits?”

So the good news is that the young can see through this, despite the wall of propaganda. The bad, awful and terrible news is that the government doesn’t care what the voters think.

h/t Peter

Image by Meranda D from Pixabay

By Jo Nova

It’s just another day in the death of the early 21st Century EV bubble

The fantasy of battery powered vehicles that also fix the weather was foisted upon the people by Big Government. But all the regulatory wands in the world, and even billions in free gifts, don’t make a market appear when the product is a dog.

EVs are meant to be storming the market on their way to domination. But in the UK, EV sales rose only 3.8% last month while the whole car market grew by 10%, so EVs are in danger of becoming a shrinking share of UK new car sales. Plug-in hybrids saw a 37% increase.

The EV experiment has gone so very wrong. Last year Ford was the number 2 EV brand in the US, but it was hit with a $4.5 billion black hole of fiscal carnage, losing $38,000 on every single EV. Obviously, something had to change, and now, months later, Ford is abandoning plans to bring in two new EV models and to retool its EV manufacturing plants. Instead, it is shifting to hybrid vehicles, copying the Toyota plan.

EVs have crashed into the hard reality that Americans just don’t want them
David Blackmon, The Telegraph

Something big is happening in the US market for battery electric vehicles (EVs), and it isn’t positive for the industry that makes them, or for the Biden administration’s subsidised dreams.

Ford suddenly puts the brakes on EV models and factories, and is copying Toyota, which was mocked and ridiculed for focusing on hybrids instead of purist EVs:

…on April 4, Ford Motor Company put the icing on this cake of electric carnage with an announcement that it is pulling back from plans to introduce two new EV models, an SUV and another pickup to tag along with the F-150 Lightning, and delaying major investments in building and “retooling” EV manufacturing plants in the US and Canada.
Ford says that after three years of making massive investments in new plant and equipment needed for the production of its F-150 Lightning and electric Mustang Mach E models, it will now focus on developing hybrid options across its entire model lineup. This places Ford on a strategic path similar to Japanese giant Toyota, which has become an object of scorn and ridicule from the climate alarmist left and globalist policymakers in the US and Europe for its stubborn, ongoing focus on making and successfully selling hybrids rather than pure EVs.

Meanwhile Fisker, a new EV startup, is pausing production for three weeks, and is on the verge of bankruptcy. Things are so desperate they have dropped the price of the “Ocean Extreme” from £58,000 to £44,000 in the hope of staving off the grim reaper. Not surprisingly, after-sales support is “not guaranteed”.

Resistance is growing downunder

The Guardian is still giving free adverts for EVs and pretending it’s news. They report that there are now 180,000 EVs in the Australian market, but don’t mention that that is a mere 1% of the total car fleet.

In Australia the EV market is in its infancy, but people already seem to realize “they catch fire” a lot. In the driest continent with the lowest population density and most expensive electricity on Earth, range anxiety and fear of fire are a real thing. Far from being excited about being offered low emissions cars, there are signs from middle Australia that the people are unimpressed already.

Dissent is so strong, people in Strata-title buildings have to struggle for 12 months to even get one EV charger added to the building. And when they do succeed the charging spot is often placed in the furthest part of the carpark, out in the garden, lest it combust. One contractor has quoted to install EV charging infrastructure in 100 buildings but only two have taken the job up.
“Resistance is growing,” he says:

The Battle for EV Charging in Strata Title Buildings Continues in Australia

It has taken somewhere near 12 months (3 Body Corporate Committee meetings, dozens of emails, an Extraordinary General Meeting and an Annual General Meeting) to get to this stage: a single 10amp GPO.

To date, he has quoted to install the electrical backbone infrastructure in over 100 buildings. It works out at roughly AU$1000 per parking bay. Only two buildings have taken up the quotes. He believes that body corporates are looking for more reasons to resist the change. “There appear to be more and more rules and regulations,” he says. “In apartment blocks there are so many people to deal with: committees; on site management. I think the resistance is growing. Some are using fire risk as a means to stop installs. Quotes have become more expensive and more complex as I have had to add fire extinguishers, smoke detectors, fire blankets and a stop/start button. Safe EV training has to be provided for those responsible in the building. More work, more cost.”

And the unsold cars pile up in the US:

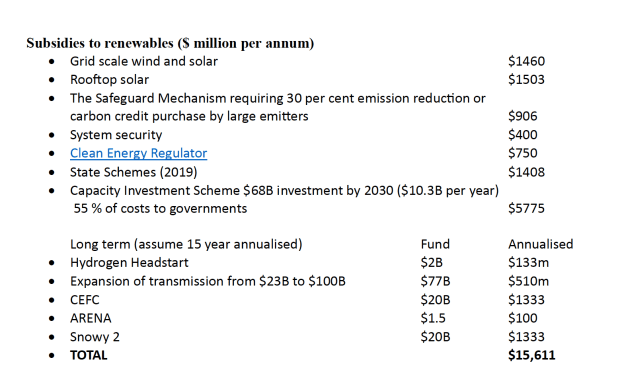

… Dear Australians, people are sneaking into your house and stealing your money every year. You may have no idea because the theft comes in one thousand little instances, hidden within labels like “electricity bill”, “peas” and “soccer fees”. It’s also hidden in your income tax. Pagan fantasy plans to stop storms and hold back the tide are being funded by you, whether you like it or not.

Solar panel installations are partly paid for by neighbors who don’t have solar panels; it’s hidden in their electricity bills. When a wind farm is built out past the Black Stump, shareholders of the wind farm don’t pay for the high voltage line to connect themselves to the grid, you do. When the new unreliable generators wipe out the midday profits from the old reliable plants, the old essential plants still have to pay their capital costs, insurance and staff. So they just have to charge more for the hours they do run. The new vandal plants make the old reliable ones more expensive than they would have been. (See Stacy and Taylor.) You pay that bill too.

When the soccer club pays higher electricity charges for the night lights, they pass that on to your eight-year-old’s club fees. And so on and so forth for the peas, the cheese, the ham and everything that’s heated or cooled or moved in the supermarket.

If a government official knocked on your front door and demanded the “Climate-changing-cash” in a single payment, there would be hell to pay. Alan Moran has added up the numbers and it works out to $15.6 billion a year in Australia, and that’s about $600 per man, woman, and child.

The grim cost of firming up solar and wind
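For anyone who wants to check the per-person figure, here is a minimal back-of-envelope sketch. The $15.6 billion is Alan Moran's estimate as quoted above. The population of about 26 million is an assumption added here for illustration, not a number from his analysis, and the family of four is hypothetical.

```python
# Back-of-envelope check of the per-person figure quoted in the post.
# ANNUAL_COST_AUD is the estimate attributed to Alan Moran in the post.
# ASSUMED_POPULATION is an assumption (a round 26 million), not from the post.
ANNUAL_COST_AUD = 15.6e9
ASSUMED_POPULATION = 26e6


def cost_per_person(total_cost: float, population: float) -> float:
    """Spread a total annual cost evenly over every man, woman and child."""
    return total_cost / population


per_person = cost_per_person(ANNUAL_COST_AUD, ASSUMED_POPULATION)
print(f"About A${per_person:,.0f} per person per year")
# A hypothetical household of four, just to put the number on a kitchen table.
print(f"About A${4 * per_person:,.0f} per year for a family of four")
```

Swap in a different population and the per-person figure moves with it. The point is only that the headline total and the per-person number are consistent with each other.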

So let’s have a referendum on whether we should be trying to change the weather. Let’s ask the people if they would rather have cheap electricity and a trip to Bali, or knock 0.0 degrees C off global temperatures in 2100AD? Would they rather buy a new fridge or pretend they can reduce the floods coming in one hundred years’ time?

We know 98% of the public say they “believe” in climate change, but we also know the renewables industry will never ever campaign for a referendum to ask what the people want. They know, we know, and the politicians know: the people don’t want to buy “the transition”.

Burglar image by Sammy-Sander from Pixabay | Thieves online image by Hyperslower from Pixabay

By Jo Nova

There is such a glut in solar panels, the Financial Times reported that people in Germany and the Netherlands are using them as cheap garden fencing, even though the angle is not good for catching the sun. Though given that there is also a glut of solar power at lunchtime this is probably a “good” thing.

Great time for the Australian Government to spend a billion dollars setting up a giant solar panel production industry, eh?

With exquisite timing the Australian Labor government has just announced a Solar Sunshot for Our Regions. It is our Prime Minister’s ambition for us to be a “Renewable Energy Superpower”, twenty years too late. One third of homes in Australia already have solar panels, but only 1% were made here.

The NSW State government will also lob $275 million to support the embryonic industry and workers, most of whom will presumably be doorknocking to give away the panels with lamingtons. After we finish building garden fences, we might be using them to build sheds and cubby houses.

The big solar rush is over…

The global frenzy to install solar panels suddenly flattened out last year, when it was supposed to be launching for orbit. The IEA estimated the world now has about 800 GW of solar panel manufacturing capacity.
But demand for solar panels this year is only expected to be 402 GW. The glut is so bad, the whole global solar panel industry could take half the year off to play golf and no one would notice.

In the media, everyone is saying “China has flooded the market”, but for some reason, no one wants to mention that the demand curve has suddenly slowed. The CCP has bet big on renewables sales and was probably expecting that rapidly rising curve to take off. Instead, as interest rate rises clamped down on “luxury” spending, people ditched their plans to install solar PV.

The glut should be no surprise to any investor. The oversupply has been recognised since January. And any serious investor in solar PV would know that solar stocks around the world were down 40% in the first three quarters of last year. The Australian Prime Minister has a whole team of researchers and Ministers, and none of them has even hired a high schooler to google the news on the solar industry?

China has flooded the market with so many solar panels that people are using them as garden fencing
Huileng Tan, Business Insider

China’s manufacturers are pumping out so many solar panels that the resulting global glut has caused prices to tank.

Solar panels are typically installed on rooftops, where they can capture the most sunlight, but there’s so much excess supply that some people are putting them on fences. This also saves on pricey labor and scaffolding costs required for roof installations, FT reported.

Fences covered in solar panels are also starting to take off in the UK, North America, and Australia.

Solar-panel supply globally is forecast to reach 1,100 gigawatts by the end of this year, three times more than demand, the International Energy Agency wrote in a report released in January.

If China makes 80% of the world’s solar panels, and if solar energy is so cheap and efficient, why doesn’t China just keep those panels and use them itself?
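The “half the year off” quip can be checked with a rough sketch using the two figures quoted above, 800 GW of manufacturing capacity and 402 GW of expected demand. It assumes, purely for illustration, that factories could run flat out and that only this year's demand needs to be met. It ignores existing stockpiles and the separate 1,100 GW forecast.

```python
# Rough utilisation check using the two figures quoted in the post.
CAPACITY_GW = 800.0  # estimated global panel manufacturing capacity (from the post)
DEMAND_GW = 402.0    # expected demand for panels this year (from the post)


def utilisation(demand_gw: float, capacity_gw: float) -> float:
    """Fraction of manufacturing capacity needed to meet demand."""
    return demand_gw / capacity_gw


used = utilisation(DEMAND_GW, CAPACITY_GW)
# Simplifying assumption: idle capacity translates directly into idle months.
idle_months = 12 * (1 - used)

print(f"Capacity needed to meet demand: {used:.1%}")
print(f"Months the industry could sit idle: {idle_months:.1f}")
```

On those numbers the industry needs only about half its capacity, which is where the six months of golf comes from.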

You can help in the information war… UPDATE: The specific link to Climate: The Movie

By Jo Nova

This tells us everything we need to know about modern Western civilization. A blimp with wings.

These pufferfish of the sky could be the ugliest, most absurd planes to take to the troposphere. They are emblematic of the era we live in.

Wind turbines destined to rot in the ocean are so big now they can’t even fit on a truck, so someone is planning a plane specially for them. It will be 100 feet longer than a Jumbo jet but carry no tourists, except the fibreglass kind that torment whales, deafen porpoises and vandalize fine electricity grids.

The whole point of these machines is a quest to appease the weather Gods one hundred years from now. Presumably these will run on fairy dust or fermented tofu.

The Flying white elephants could “hit the sky” in four years

To be clear, all that was announced two weeks ago was that Radia has “plans” to make these aircraft and wants $300 million. Presumably the photos here were made by ChatGPT or equivalent.

Radia’s WindRunner to be the world’s largest aircraft ever built
by Rizwan Choudry, Interesting Engineering

The WindRunner’s colossal dimensions dwarf even the most iconic commercial aircraft. Measuring an astounding 356 feet long, with a height of 79 feet and a wingspan of 261 feet, it outstrips the Boeing 747-8’s length by 106 feet. To put things in perspective, the WindRunner is almost as long as an NFL football field.

Correction: Business Insider says: Radia’s plane has a cargo bay volume of 272,000 cubic feet, 12 times that of a Boeing 747-400F …

Let’s not forget the sole aim and purpose of the plane, the factories that make it, and the entire load it carries is to reduce human fossil fuel use.

According to Olivia Murray of American Thinker, it will supposedly run on renewable fuel:

Radia promises that this plane will run on “sustainable aviation fuel” instead of traditional jet fuel, but what is SAF exactly? Well, SAF is just a type of “biofuel” that has apparently met certain criteria to be legally-labeled as “sustainable.” So, just as long as you ignore all the cleared forests and prairies to make way for the taxpayer-subsidized corn and soy enterprises to grow the product to make the “fuel,” and you ignore the devastation caused by corporate (mono)agriculture, then maybe you can delude yourself into believing this is a more environmentally-friendly option.

SAF is “sustainable” in the same way that wind turbines are sustainable: you have to ignore the impact on migratory birds, the petroleum-based resins used to manufacture the fiberglass blades, the toxic refrigerants in the turbine house, the petroleum-based lubricants for the machinery, etc., if you’re to believe the lie.

They could always run it on solar power and electric batteries if they don’t mind replacing the battery every three weeks (or maybe every time it flies).

The plane will apparently need 6,000 foot runways to take off and land. Radia hope that onshore turbines will also be delivered to far flung and remote sites where it is hard to deliver wind turbine parts now. (Since those sites are often mountainous and lacking in long international airport runways, who knows, perhaps the blades can be air-dropped?) UPDATE: The Wall Street Journal says they will have to build a new dirt runway for each project.

Having waited seven years to reveal their plans they may have missed the renewable bubble by six months. Bad luck eh? Investors are fleeing after Siemens discovered that instead of being more efficient, the bigger blades were a maintenance nightmare. Even insurance companies are balking at paying for all the cable breaks.

h/t Bally

By Jo Nova

Strange sightings of Batman and Robin have been occurring in London

Thousands of ULEZ (Ultra Low Emission Zone) cameras have been installed to record numberplates of offending older cars so their owners can be charged £12.50 for daring to drive a non-compliant car in London. This usually means any diesel car made before 2014 or a petrol car made before 2006, so the fines especially hurt low paid workers, students and retirees.

The two masked crusaders have been seen helping the local bat population by installing homes for them in front of ULEZ cameras. Since bats are a protected species (unlike motorists), once the bat boxes are installed they cannot be tampered with nor disturbed by law. Batman and Robin helpfully also place legal notices on the poles so workers coming to fix the cameras know they risk a six month jail sentence or an “unlimited” fine if they so much as touch the bat box.

The bat boxes cost £10.

Bat roosts have legal protection:

Vigilante anti-ULEZ campaigners hang bat boxes on cameras to stop engineers fixing them
Adam Toms and Rom Preston-Ellis, The Mirror

The £10 animal homes have been spotted attached to ULEZ devices in Chessington, Kingston upon Thames and North Cheam.

ULEZ opponents say they are “positively contributing to London’s biodiversity and ecosystem”.

One said: “I’m sure whoever is behind it is extremely grateful to TfL for providing the poles to house this protected species.”

Photos in the collage come from this twitter report, and the one above. h/t Bally


Copyright © 2024 JoNova - All Rights Reserved
